Congress has recently proposed multiple bills to regulate the internet in the name of protecting children, such as the “Kids Online Safety Act.” This has prompted a simple response: “Just have parents be parents and set up parental controls if they care.”

That’s easier said than done. Parental controls right now completely suck: they are incomplete, full of loopholes, extremely buggy, overly complicated, poorly designed, privacy-invading, or some combination of the above. As someone who has used almost every ecosystem, here’s an eye-opener.

Also, here’s my rallying cry: if you don’t want Congress regulating the internet to “protect the Kids,” demand that companies fix their parental controls.

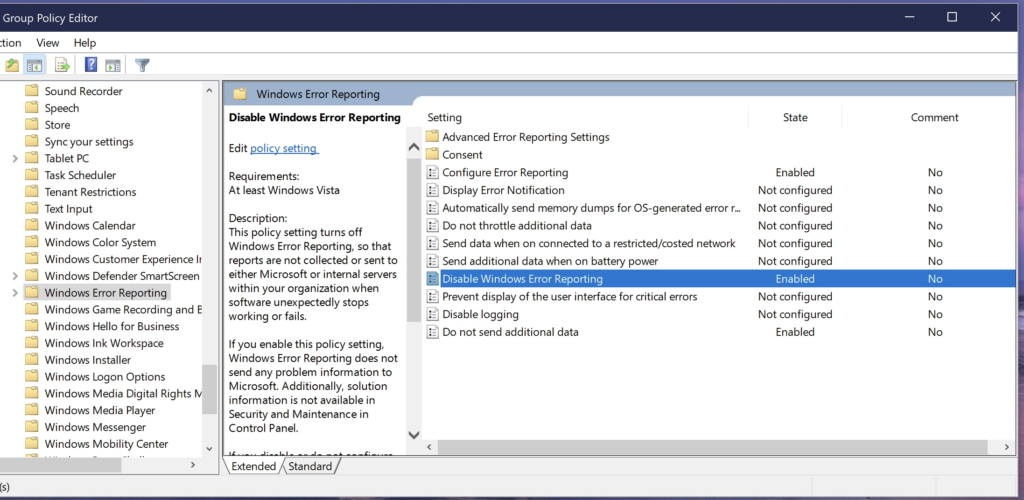

Windows 11

Microsoft’s tool for parental controls on Windows is Family Safety. Here’s my hate list.

- Every child must have a Microsoft Account to use it. Even the 8-year-olds. Local accounts are still supported by Windows 11 after setting up the main account, but you can’t use parental controls with them.

- You can’t disable Windows Copilot or Edge Copilot. Don’t want your kid using AI from Bing or ChatGPT when they are just learning to write? Too bad. Don’t want them using AI for any number of ethical or personal reasons? Too bad.

- You can’t disable the Microsoft News feed of tabloid content on new Microsoft Edge tabs without disabling Bing, which leaves you unable to offer your child restricted “SafeSearch” browsing of any kind.

- You can’t disable the Microsoft Search button, which shows… surprise, more tabloid content. This holds even if your child’s account is set to “allowed websites only”; they’ll still see the day’s headlines about how the world is on fire.

- You can’t disable the Widgets button. The entire purpose of widgets is to serve tabloid content. And once again, “allowed websites only” doesn’t disable them or do much of anything to them.

- Windows 11 comes preloaded with Movies+TV, Xbox, Microsoft News, and other apps. Unless you, as a parent, know about these apps, open each one individually so it appears in the dashboard, and then block it, they’re allowed. What?

- You can’t disable the Microsoft Store. You can set age restrictions, so blocking all apps rated above age 3 is the best you can do.

- Microsoft Office lets you embed content from Bing and does not respect system parental controls… at all. Even if you block Bing, your 8-year-old can still watch videos to their heart’s content by embedding them in a Word document, because the internet filters only work in Microsoft Edge.

So let’s say you want to let your 8-year-old do schoolwork on a Windows PC. What’s the point of parental controls when you can’t disable Widgets, you can’t disable the tabloid content in the Search box even if you block Bing, the Bing filter can still be bypassed using Office, and your student can use AI all they want? These are “parental controls”?

macOS / iOS

Screen Time is quite powerful and stupidly buggy. So buggy, in fact, that Apple admitted as much to a reporter a few months ago. In my experience, no part of macOS is buggier than Screen Time. How so?

- It appears that Screen Time runs using a daemon (background process) on the child’s account. That daemon has a propensity to crash randomly after running for a few hours. When it does, all locks are disabled: Safari will let you open Private windows with no filtering of any kind, regardless of your internet filtering settings. This state persists until the child logs out and logs back in. So what’s the point of parental controls that randomly fail open?

- The “allowed websites only” option makes it clear Apple has never used this feature, ever. On your first login, you will be swarmed with prompts to approve random IP addresses and Apple domains, because system services can no longer communicate with the mother ship. They nag you constantly with no option to disable them, so your first experience of enabling this is entering your PIN a dozen or more times to approve all sorts of random junk just to get to a usable state.

- The “allow this website” button on the “website blocked” page randomly doesn’t work. This might be because the code is so old (and likely untested for so long) that the page isn’t even HTML5, which suggests it’s been, what, a decade and a half since anyone really looked at it?

- You can’t disable any built-in system apps. You don’t want your kid browsing the Apple Books store? You don’t want your kid seeing suggestive imagery and nudity in the Apple Music app? You don’t want your kid listening to random podcasts from anyone in the Apple Podcasts app? You can’t do anything about it except set a one-minute time limit. Of course, that time limit randomly doesn’t work either.

- The “allowed websites” list in macOS has a comical, elementary bug showing how poorly tested the code is. If you open the preference pane, it shows a list of allowed websites (say, A, B, and C). Let’s say I add a website called D and close the pane. When I open it again, only A, B, and C are in the list; D is nowhere to be seen! D was in fact added to the system; it just isn’t shown in the list. If I then add E (so the list is now A, B, C, and E), D is removed, and opening the preference pane once again shows just A, B, and C.

Nintendo Switch

I generally like Nintendo, but the Nintendo Switch Parental Controls are inexcusable.

- Every parental control is per-device. What about families that have, say, multiple children and can’t afford multiple Switches (or don’t want the risk of owning several)? Sharing one Switch seems reasonable, but no: if you use Parental Controls, every Switch is effectively personal to its owner.

- Let’s say you then go, fine, and buy multiple Switches. There’s no way to set a PIN lock, so theoretically the kids could just… swipe each other’s Switches?

- You can’t hide titles on the menu. Let’s say you have two kids on the same Switch. One plays M-rated titles, the other plays E-rated titles. The kid who plays E-rated titles will see all the M-rated titles on the Home Screen, and nothing can be done about it. They can even launch them and play them.

- You can’t disable the eShop from within the Parental Controls app. You can dig through the eShop settings to find the option to require a password before signing in, but that requires the kid to not know their own Nintendo password. If your kid uses, say, their actual email address on their Nintendo account, locking down the eShop is impossible – and they’ll see every game for sale regardless of how appropriate or inappropriate it is (Hentai Girls, Waifu Uncovered, anyone? Games you can “play with one hand”? Actual titles on the eShop).

Router based filtering

This is one of the best solutions. Unfortunately:

- Every child must have their own device (again, poor families need not apply, which makes this a very financially uninclusive solution).

- It’s often technically overwhelming for parents. What IP address is that kid’s laptop again?

- Premium routers like Eero, which have very easy-to-use router-based parental controls, often demand subscriptions to use them. In Eero’s case, that’s $9.99/mo. after you’ve already paid ~$200 for the devices. That’s not a viable solution: I can’t tell a poor family to pay $200+ for routers plus a $9.99/mo. subscription.

- Some routers have parental controls that make me wonder what idiot thought this would work. Case in point: a Netgear router from a few years ago that let me “block websites” and had “parental controls” right on the box. Cool, but they worked by having the parent enter websites to block one domain at a time. Considering the internet’s scale, that’s criminally useless, and if I had a lawyer, I would’ve sued for false advertising.

- Remember the Nintendo eShop? Try using router-based parental controls to block eShop access without blocking software updates or online play. Good luck with that. Router-based blocking has the least nuance of any of the above solutions.

But sure. It’s the parent’s job to set up parental controls. My response, once again: if you don’t want Congress regulating the internet, parents need better tools than these.